How to Avoid CAPTCHAs? Multiple Ways!

In this article, I’ll dive into the different types of CAPTCHA challenges, their purpose, and how we can bypass them. I’ll explore practical techniques that web scrapers can use to overcome CAPTCHAs. Whether you’re a pro at web scraping or just starting out, learning to get past CAPTCHAs is key for efficient data collection and analysis.

Web scraping helps us collect and analyze data from various websites. However, websites are increasingly using anti-scraping technologies like CAPTCHA, making our job tougher. CAPTCHAs are designed to stop automated bots and scripts from accessing sites.

Join me as we uncover the best practices to handle CAPTCHAs and make web scraping a smoother process.

What is a CAPTCHA?

CAPTCHA stands for Completely Automated Public Turing test to tell Computers and Humans Apart. It is a challenge-response test designed to separate humans from automated programs, known as bots.

CAPTCHA works well to stop bots from using web services. It ensures that real people, not automated scripts or spam bots, access these services. This is particularly useful for preventing web scraping bots from collecting data without permission. By making users prove they are human, CAPTCHAs protect websites from unwanted automated access.

These tests are a common sight online. They help maintain the security and integrity of web services. Whether it’s checking a box, identifying images, or typing a word, CAPTCHAs are a key defense against bots. Understanding CAPTCHAs is important for anyone involved in web scraping or online security.

Types of CAPTCHAs

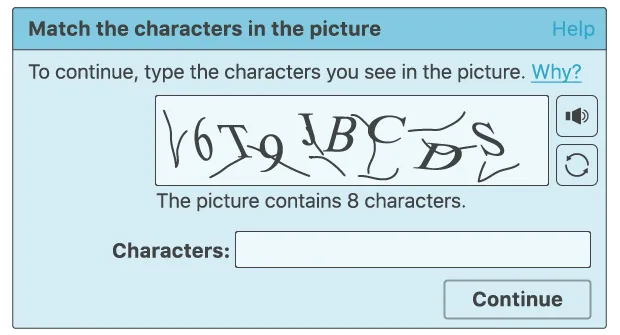

Here are some common types of CAPTCHA challenges you might encounter:

Text CAPTCHAs: These require users to type the characters shown in an image. It’s a simple but effective way to verify humans.

3D CAPTCHAs: This newer type uses 3D characters, making it harder for bots to recognize and solve them.

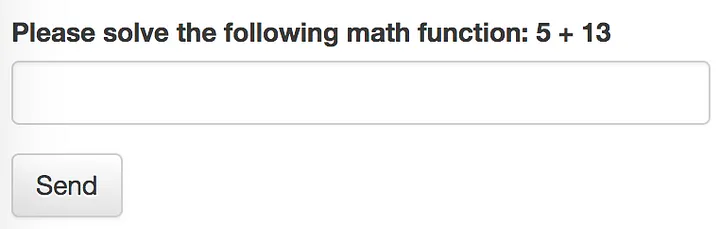

Math Challenges: Users must solve a basic math equation to pass this test. It’s straightforward and ensures human interaction.

Image CAPTCHAs: Users identify specific objects within a grid of images. This is a popular and effective method.

Invisible and Passive CAPTCHAs: These are more subtle and hidden within the website’s code. Invisible CAPTCHAs run a JavaScript challenge when you click submit, checking whether your browser behaves like a human’s.

Passive CAPTCHAs are time-based checks. For example, typing too quickly can be flagged as suspicious.
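To make the idea of a time-based check concrete, here is a minimal sketch of how a site might flag a form that is submitted too quickly. The function name `submitted_too_fast` and the 3-second threshold are assumptions for illustration; real sites choose their own signals and limits.

```python
import time

MIN_FILL_SECONDS = 3.0  # assumed threshold, not a standard value


def submitted_too_fast(rendered_at: float, submitted_at: float,
                       min_seconds: float = MIN_FILL_SECONDS) -> bool:
    """Flag a submission that arrives sooner than a person could plausibly fill the form."""
    return (submitted_at - rendered_at) < min_seconds


rendered = time.time()
fast = submitted_too_fast(rendered, rendered + 0.4)    # bot-like timing
slow = submitted_too_fast(rendered, rendered + 12.0)   # human-like timing
print(fast, slow)
```

A scraper that fills and submits forms instantly would trip a check like this, which is one reason the pacing advice later in this article matters.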

Sometimes, websites use a combination of these methods to increase security. Understanding these types helps you prepare for various web scraping challenges.

How to Avoid CAPTCHA and reCAPTCHA When Scraping?

Web scrapers use different techniques to bypass CAPTCHAs. Here are the most effective methods:

Avoiding Direct Links

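Websites can flag bots by how their requests arrive. A request that lands on a page with no referring page, as a scripted direct link does, looks less like ordinary browsing. When a site sees many such direct hits, it may use CAPTCHAs to block them.

To avoid this, set the Referer header (the HTTP header name is spelled with a single "r"). This makes it appear as if your request came from another page, not directly.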

By setting a referrer header, you can trick the target website into thinking your traffic is legitimate. This method helps you bypass CAPTCHA defenses and access the data you need.

Using referrer headers is a simple yet effective way to make your web scraping efforts look more natural and avoid detection.
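As a rough illustration, the sketch below attaches a Referer header to a request using Python’s standard `urllib.request` module. The URLs and the helper name `build_request` are placeholders I made up for the example, and the request is only built here, not sent.

```python
import urllib.request


def build_request(url: str, referer: str) -> urllib.request.Request:
    """Create a GET request that carries a Referer header."""
    # The HTTP header name is spelled "Referer" (one "r").
    return urllib.request.Request(url, headers={"Referer": referer})


# Hypothetical URLs, for illustration only.
req = build_request(
    "https://example.com/products?page=2",
    "https://example.com/products",
)
print(req.get_header("Referer"))
# To actually send it: urllib.request.urlopen(req, timeout=10)
```

Picking a referer that a real visitor could plausibly have come from, such as the previous page of a listing, tends to look more natural than a fixed value on every request.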

Use Proxies

One effective way to avoid CAPTCHAs is by using rotating residential proxies. Rotating proxies change the IP address your requests come from, making it hard for websites to tie your traffic to a single IP or to identify your real one.

You can choose to switch your IP with each request or at set intervals. This method helps you blend in with normal traffic, reducing the chance of triggering CAPTCHAs.

Many providers offer residential rotating proxies and affordable, reliable datacenter proxies. These tools help you avoid CAPTCHA defenses and keep your web scraping running smoothly. Using rotating proxies is a simple and powerful way to make your web scraping efforts more successful.
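Here is a minimal sketch of per-request rotation, assuming you already have a list of proxy endpoints from a provider. The addresses below are placeholders from a documentation-only IP range, and `proxy_cycle` and `opener_for` are hypothetical helper names. Many commercial rotating proxies handle rotation on their side behind a single endpoint, in which case none of this is needed.

```python
import itertools
import urllib.request

# Hypothetical proxy endpoints; replace with ones from your provider.
PROXIES = [
    "http://203.0.113.10:8080",
    "http://203.0.113.11:8080",
    "http://203.0.113.12:8080",
]


def proxy_cycle(proxies):
    """Yield proxies in round-robin order, forever."""
    return itertools.cycle(proxies)


def opener_for(proxy: str) -> urllib.request.OpenerDirector:
    """Build an opener that routes HTTP and HTTPS traffic through one proxy."""
    handler = urllib.request.ProxyHandler({"http": proxy, "https": proxy})
    return urllib.request.build_opener(handler)


rotation = proxy_cycle(PROXIES)
first_four = [next(rotation) for _ in range(4)]  # wraps around after the third
# Per request: opener_for(next(rotation)).open(url, timeout=10)
```

To rotate at set intervals instead, keep using the same opener for a fixed number of requests or seconds before calling `next(rotation)` again.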

Avoid Honeypots

Honeypots are hidden elements on websites designed to catch bots. If your bot interacts with a honeypot, it will be detected and likely banned.

To avoid honeypots, check the CSS attributes of site elements before interacting with them. Ensure the elements are not hidden or disabled. Once you verify an element is safe, you can proceed.

Honeypots aren’t very common on most sites, but it’s wise to stay cautious. By being careful and checking for honeypots, you can avoid detection and keep your web scraping activities secure and efficient.
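The check described above can be sketched with Python’s built-in HTML parser. This example only inspects inline attributes (`style`, `hidden`, `disabled`), which is a simplifying assumption: elements hidden through an external stylesheet or JavaScript would need a real browser to detect. The names `looks_hidden` and `VisibleLinkCollector` are made up for this example.

```python
from html.parser import HTMLParser

HIDDEN_STYLES = ("display:none", "visibility:hidden")


def looks_hidden(attrs: dict) -> bool:
    """Return True if inline attributes suggest the element is hidden or disabled."""
    style = (attrs.get("style") or "").replace(" ", "").lower()
    if any(marker in style for marker in HIDDEN_STYLES):
        return True
    # Boolean attributes such as `hidden` appear as keys with a None value.
    return "hidden" in attrs or "disabled" in attrs or attrs.get("type") == "hidden"


class VisibleLinkCollector(HTMLParser):
    """Collect href values of <a> tags that are not obviously hidden."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        attr_map = dict(attrs)
        if tag == "a" and "href" in attr_map and not looks_hidden(attr_map):
            self.links.append(attr_map["href"])


sample = """
<a href="/catalog">Catalog</a>
<a href="/trap" style="display: none">Do not follow</a>
<a href="/trap2" hidden>Hidden</a>
"""
collector = VisibleLinkCollector()
collector.feed(sample)
print(collector.links)  # only the visible link remains
```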

Pacing Your Requests

Websites can spot bots by their behavior. Bots usually act faster and more predictably than humans, which can trigger CAPTCHA.

To avoid this, use random time intervals between your bot’s requests. This helps make your activity seem more like a real user’s.

Additionally, add delays between consecutive requests. This reduces the risk of CAPTCHAs and prevents overloading the site. By mimicking natural browsing patterns and spacing out requests, you can keep your bot under the radar and avoid detection. This approach ensures your web scraping activities are smooth and effective.
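One simple way to do this is to sleep for a random interval before each request after the first. The sketch below assumes a 2 to 6 second range, which is an arbitrary choice; `paced` and `random_delay` are hypothetical helper names, and the `sleep` parameter exists only so the pause can be swapped out in tests.

```python
import random
import time


def random_delay(min_seconds: float = 2.0, max_seconds: float = 6.0) -> float:
    """Pick a delay uniformly at random between the two bounds."""
    return random.uniform(min_seconds, max_seconds)


def paced(items, min_seconds=2.0, max_seconds=6.0, sleep=time.sleep):
    """Yield items one by one, pausing a random interval between them."""
    for index, item in enumerate(items):
        if index > 0:
            sleep(random_delay(min_seconds, max_seconds))
        yield item


# Usage (fetch is a hypothetical function of your own):
# for url in paced(urls):
#     fetch(url)
```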

Rotating User Agents

Some websites allow certain bots, like search engine spiders, to crawl their content. However, your bot isn’t one of these, so you need to hide its identity.

Replace your user agent with one from a popular browser or a supported bot. This helps your bot bypass CAPTCHAs. But just changing your user agent once won’t be enough. Websites continually update their defenses. You need to rotate through a variety of user agent strings while making requests.

Also, check the headers of your browser and make sure your bot forwards them. This ensures your bot mimics real user behavior and avoids detection. By rotating user agents and forwarding browser headers, you can keep your web scraping efforts effective and undetected.
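A minimal sketch of this idea follows: pick a user agent at random for each request and send it alongside a few headers that browsers typically include. The user agent strings and header values are examples only and go stale quickly, so treat them as placeholders; `browser_like_headers` is a hypothetical helper name.

```python
import random
import urllib.request

# Example user agent strings; placeholders that you should keep up to date.
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/124.0.0.0 Safari/537.36",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 "
    "(KHTML, like Gecko) Version/17.4 Safari/605.1.15",
    "Mozilla/5.0 (X11; Linux x86_64; rv:125.0) Gecko/20100101 Firefox/125.0",
]


def browser_like_headers() -> dict:
    """Return headers with a randomly chosen user agent plus common browser headers."""
    return {
        "User-Agent": random.choice(USER_AGENTS),
        "Accept": "text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8",
        "Accept-Language": "en-US,en;q=0.9",
    }


# Build (but do not send) a request with a fresh set of headers.
req = urllib.request.Request("https://example.com/", headers=browser_like_headers())
```

Calling `browser_like_headers()` once per request gives the rotation; copying the exact header set from your own browser’s developer tools is a reasonable way to choose the remaining values.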

Using VPNs and Proxy Servers

VPNs and proxies help disguise your traffic behind another IP address. They are effective tools for avoiding Google’s reCAPTCHA.

When choosing between a VPN and a proxy, remember that public options are risky. Free servers or IPs are easily detected and blocked by Google. Paid VPN services are more reliable as they frequently update server locations to avoid detection.

For proxies, it’s best to buy from a reputable provider like Bright Data. They offer a wide selection of private proxies, ideal for bypassing Google CAPTCHAs. You can choose proxies specifically designed for search engines or Google to ensure smooth web scraping without interruptions. Reliable proxies and VPNs are key to avoiding reCAPTCHA and maintaining access.

Using a CAPTCHA Solver

You can use paid CAPTCHA decoding services that employ human operators to solve CAPTCHAs. These services are known for their fast and reliable solutions, with costs typically around 50 cents for 1,000 reCAPTCHA v2 puzzles.

Google has also introduced reCAPTCHA v3, which scores each interaction in the background to estimate whether you are human without interrupting your work. Legitimate users see fewer CAPTCHA challenges as a result, and the site decides how to treat low-scoring traffic.

Other CAPTCHA solvers are automated and support multiple programming languages like PHP, JavaScript, Golang, C#, Java, and Python. These services advertise high success rates and throughput of millions of CAPTCHAs per minute, which helps your scraper run smoothly and continuously.

Conclusion

Avoiding CAPTCHAs is a crucial skill for effective web scraping. We explored different CAPTCHA types and their role in blocking automated access. Overcoming these challenges involves practical techniques like setting a Referer header, rotating proxies and user agents, pacing your requests, avoiding honeypots, and using CAPTCHA-solving services.

Remember, ethical considerations and legal compliance are paramount when scraping websites. Always respect the site’s terms of service and privacy policies.

By mastering these methods, you can streamline your data collection process, saving time and effort. Stay adaptive, as CAPTCHA technologies continually evolve. Keep honing your skills and tools to ensure you stay ahead in the ever-changing world of web scraping.