How to Scrape Websites Using Google Sheets

I’ll walk you through different ways you can use Google Sheets to scrape data. There are functions like IMPORTXML, IMPORTHTML, IMPORTDATA, IMPORTFEED, and IMPORTRANGE, each suited for different tasks. These tools let you pull data from web pages, structured tables, or RSS feeds. Although there are limitations, they offer a good starting point for basic data extraction.

Alternative Solution — Automated Web Scrapers

If the scale of your project doesn’t support Google Sheets usage, or the data you are trying to retrieve is being protected by advanced anti-scraping technologies, try using some of the following web scraping tools (some are free or offer a free trial which should be enough for a 1 time project):

- Bright Data — Best overall for advanced scraping; features extensive proxy management and reliable APIs.

- Octoparse — User-friendly no-code tool for automated data extraction from websites.

- ScrapingBee — Developer-oriented API that handles proxies, browsers, and CAPTCHAs efficiently.

- Scrapy — Open-source Python framework ideal for data crawling and scraping tasks.

- ScraperAPI — Handles tough scrapes with advanced anti-bot technologies; great for developers.

- Apify — Versatile platform offering ready-made scrapers and robust scraping capabilities.

I am not affiliated with any of these services.

Read more about each service here. Let’s continue with our Google Sheets scraping guide now.

Google Sheets can be a powerful tool for web scraping, allowing users to extract and organize data directly from websites. You can automate data collection and streamline analysis by using specific built-in functions. Here are five essential Google Sheets functions to get started with web scraping.

Using IMPORTXML to Extract Structured Data

The IMPORTXML function allows users to import data from structured sources like XML, HTML, or RSS feeds by using XPath queries. It effectively extracts specific elements such as headings, prices, or dates. The syntax for this function is:

=IMPORTXML(“URL”, “XPath_query”)

For example, to extract blog titles from a site, you would input:

=IMPORTXML(“https://example.com/blog”, “//h2”)

This function can scrape content like headlines, product details, or other structured elements on a web page.

Leveraging IMPORTHTML for Tables and Lists

The IMPORTHTML function is used for importing data from HTML tables or lists. The function’s format is:

=IMPORTHTML(“URL”, “query_type”, index)

Where “query_type” can be “table” or “list” and “index” specifies the position of the element on the page. This function is ideal for pulling data from pages structured with clear tables or ordered lists.

Extracting CSV Data with IMPORTDATA

If the target data is available in CSV or TSV format, the IMPORTDATA function can pull it directly into Google Sheets. It requires just the URL of the data file, for instance:

=IMPORTDATA(“https://example.com/data.csv”)

This method is straightforward but limited to sources providing data in CSV or TSV formats.

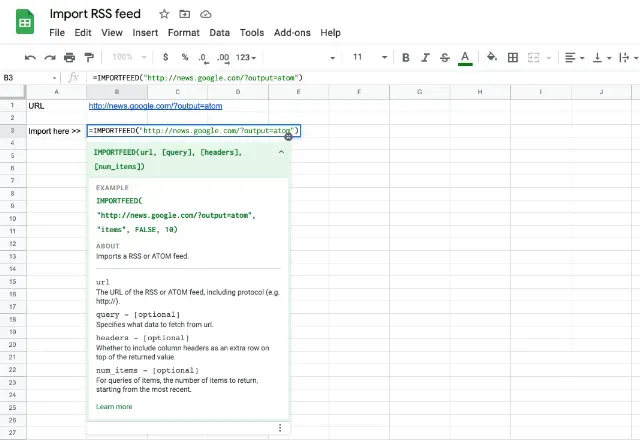

Importing RSS Feeds with IMPORTFEED

The IMPORTFEED function fetches data from RSS or Atom feeds, making it suitable for content like news updates or blog posts. Its usage looks like this:

=IMPORTFEED(“URL”)

The function can be customized further to extract specific elements from the feed.

Merging Data from Multiple Sheets with IMPORTRANGE

To consolidate data from different spreadsheets, use the IMPORTRANGE function. This function connects multiple Google Sheets, allowing data sharing across sheets:

=IMPORTRANGE(“spreadsheet_url”, “Sheet1!A1:B10”)

This feature is useful for combining data from different sources while keeping the sheets linked dynamically.

Limitations of Google Sheets for Web Scraping

While Google Sheets can effectively scrape static data, it struggles with dynamic content loaded through JavaScript or Ajax. Additionally, it may hit rate limits if too many requests are made within a short period. These limitations make Google Sheets unsuitable for scraping large datasets or content requiring complex navigation.

Overcoming Challenges with Advanced Tools

For tasks involving complex scraping requirements, such as handling dynamic content, pagination, or anti-bot measures, a dedicated tool like Octoparse or Bright Data may be a better option. No-code scraping tools simplify data extraction with automated workflows and built-in anti-scraping mechanisms.

Conclusion

Using Google Sheets for web scraping is a beginner-friendly approach, especially for simple data extraction tasks. By mastering functions like IMPORTXML, IMPORTHTML, and others, you can efficiently pull data from various sources. However, for more advanced requirements, exploring specialized tools may be necessary.